So, not all that long ago, we were profiling our application, and I noticed that a particular part of our system was consuming a huge amount of time sorting arrays of 300,000 to 1M items. I was a little surprised to see that this was taking an inordinate amount of time but it shouldn’t have been so surprising to me, since the keys by which we were sorting these arrays are somewhat complex and so comparisons are nontrivial.

The arrays we were sorting were lists of keys (and their associated data), and we were sorting them in a lexicographic ordering that was based on an elsewhere defined sort order (that was defined by the user). The keys are themselves a list of id-value pairs where the ‘id’ is a short integer, and the value is a string. So, as an example, consider the problem of sorting the following list in lexicographic order by the key ids 3, 4, 0 (in that order):

- (0 = “UL1”, 3 = “M”, 4 = “S”)

- (3 = “F”, 0 = “UL4”, 4 = “S”)

- (4 = “S”, 3 = “M”, 0 = “UL2”)

- (4 = “N”, 3 = “F”)

When looking at the data, a few things stand out. Firstly, the keys aren’t all in the same “internal order”. This is because the keys come from various different places in the system and are created at various different times, and are not sorted because the cost of doing so proved to be a performance issue. Secondly, not all of the keys have all of the key ids (0, 3, and 4). This is a feature of our system where not all keys require all ids. Keys cannot overlap, but a missing ‘id’ is a wildcard, and allows searches to match that key with any value for that id.

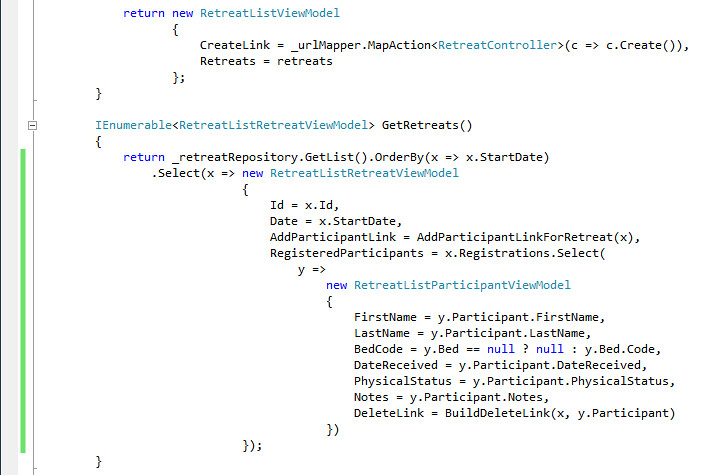

When doing a comparison between these keys, we were using a IComparer implementation that looks like the following code snippet. The IKeyInstance interface is the interface for the objects that contain one of the rows of the above list of data. GetValue returns the string associated with the id passed as its argument (or null if the value doesn’t exist in the key).

1: public class KeyInstanceSortedComparer : IComparer<IKeyInstance>

2: { 3: private readonly short[] _KeyIdSortOrder;

4:

5: public KeyInstanceSortedComparer(IEnumerable<short> keyIdSortOrder)

6: { 7: _KeyIdSortOrder = keyIdSortOrder.ToArray();

8: }

9:

10: public int Compare(IKeyInstance keyInstanceA, IKeyInstance keyInstanceB)

11: { 12: foreach (var keyId in _KeyIdSortOrder)

13: { 14: var keyValueA = keyInstanceA.GetValue(keyId);

15: var keyValueB = keyInstanceB.GetValue(keyId);

16:

17: var compareResult = string.Compare(

18: keyValueA.ToValue(),

19: keyValueB.ToValue());

20: if (compareResult != 0)

21: return compareResult;

22: }

23: return 0;

24: }

25: }

The _KeyIdSortOrder field is the sort order for the particular sort operation we are performing when using this comparer. The ToValue method is an extension method that returns the value’s string if it’s non-null, or null otherwise. It’s pretty easy to see from this snippet that this code implements a lexicographic sort on the IKeyInstance implementers.

Now, given the fact that the IKeyInstance implementers did not sort their id-value pairs internally, GetValue has to do a linear search of the key instance in order to find the appropriate value. Additionally, the comparator must compare as many keyId’s values as it encounters until it either reaches the end of the list of id’s to compare, or it finds an id for which the two keys have different values. The keys are never larger than about 25 ids in any key, and they are typically more like 2 – 8 id-value pairs per key. Also, much of the memory consumed in the runtime footprint of our application was at one point held by these IKeyInstance implementers or their associated id-value pairs, so every effort to keep their size down must be made.

One of the optimizations we made to reduce the size of these key instances was to manufacture the strings used as “values” for the id-value pairs from a factory that coalesced duplicates so that we only ever had one instance of a given value in memory at any point in time. Another optimization was to do the same with id-value pairs themselves. These two combined optimizations had a profound impact on the memory footprint of our application, due to the amount of duplication present in the data, and the limited amount of uniqueness in the key’s values and id-value pairs.

Anyway, to get back to the sorting, I spent some time looking at the sort performance and realized that no matter what I did, I couldn’t really improve the performance of the sort much, so long as we were continuing to do all these comparisons. The problem is, the comparisons are simply expensive due to the nature of GetValue in IKeyInstance and the fact that we weren’t sorting the entries. We could have used Dictionary in the key instances, but that would have taken up too much memory for our taste. We could have used a sorted list of id/value pairs, but when there are only typically 2-8 columns in the key, binary search would likely only be slightly faster than a linear search, and may have even been slower.

So…. I started looking for ways to parallelize the algorithm. One of the other developers on my team – Robert Ream – was looking into parallel implementations of quicksort and mergesort to try to figure out how we could improve the performance of our sorting, since we have a few processors at our disposal on our typical users’ machines. I also asked him to look into ways of making the algorithm cancellable and restartable so that we could ‘stop’ the algorithm in progress, serialize its state to disk, and move on to another item in the UI without losing the work that had already been done. While this was a very interesting problem to solve, it turned out that trying to get rid of the comparisons would bear much more tasty fruit.

Thinking outside the box

In the process of looking for ways to reduce the number of comparisons, I started talking to Robert about which of the algorithms we knew about had less comparisons (mergesort / quicksort / etc.). After some discussion, we really didn’t end up anywhere useful, so Robert continued working on his parallel quicksort implementation. In the meantime, the weekend came, and I decided to spend a couple hours researching what else could be done. I pulled out Knuth’s TAOCP Vol 3 and started reading. In it, I came across his description of radix sort. I read his description, which didn’t lead me to any great revelations (since he basically said that it doesn’t typically perform that much better than the other algorithms), but I was intrigued by the fact that it didn’t use comparisons.

I then continued searching for information, reading Cormen, and a couple of other algorithm books I have around the house, and then did some searching online. I came across several publications by Stefan Nilsson on the subject of radix sort, and read some of them. It turns out that after reading a few of these I started to think about our problem a bit more and try to consider whether it could be framed in a structure that allowed us to apply radix sort. Still, the complete lack of comparisons was the motivating factor for me, given my knowledge of the fact that the comparisons were more than 95% of the time spent in our sorting code.

After a few hours of thought on the subject on Friday, I decided Saturday afternoon to investigate a bit in code. So, if you don’t have any background on radix sort, the basic idea is this – instead of using comparisons, you must have numerical values that can be assigned to the ‘values’ of your key elements being sorted, and the assignment must be in the same order as your sort order. For instance, if you want to sort a bunch of strings, the values are the ASCII values of the characters (or at least their ASCII sort collation order number). Radix sort takes advantage of this fixed space to allow you to do array assignments and simple linked list operations instead of doing comparisons. Radix sort performs best if you have a large ‘alphabet’ of values to work with and your keys are either non-overlapping, or don’t have a very large number of duplicates.

There are several variations of radix sort algorithms - the two classifications are MSD and LSD approaches. I used the MSD approach since it was easier to understand how it applied to my problem, and I originally thought I had varying length keys so it seemed like it would be more appropriate (LSD approach seems to work better with fixed-length keys).

In order to apply radix sort to my problem, I needed to be able to assign a value to each key-value in my key. Since I already had a factory that maintained the invariant that there was only one instance of each key-value in memory at any given point in time, and in order to do this, the factory needed to track these objects, I decided to provide the ability for the factory to assign a ‘sort order’ value to each of the key-values. An integer was big enough, and I could put some intelligence in the caching factory to have it only perform the sort when requested AND necessary (due to a change having occurred to the list of key-values).

Given these sort order values, I could define an “alphabet” of 0..N where N is the number of values in existence and the highest sort order value, 1 is the lowest, and 0 represents a missing id in the key. Then, I could logically think of my keys as looking like ordered M-tuples of integers (where M is the number of IDs in my sort order specification, and the maximum number of id-value pairs to be found in any key). The integers in these M-tuples were the sort order values from the key-value list, and the tuples were logically sorted in the appropriate order for doing the lexicographic sort.

Then, the keys above could (fictitiously) look something like (in the sort order 3, 4, 0):

- (2, 4, 5) //(0 = “UL1”, 3 = “M”, 4 = “S”)

- (1, 4, 7) //(3 = “F”, 0 = “UL4”, 4 = “S”)

- (2, 4, 6) //(4 = “S”, 3 = “M”, 0 = “UL2”)

- (1, 3, 0) //(4 = “N”, 3 = “F”)

with the sort order values being:

(1 = F, 2 = M, 3 = N, 4 = S, 5 = UL1, 6 = UL2, 7 = UL4)

and the prescribed sort order is, of course

- (1, 3, 0)

- (1, 4, 7)

- (2, 4, 5)

- (2, 4, 6)

Implementation of Radix Sort

So, armed with this approach of assigning ordinal positions to the values in my keys, and the logical definition of a key as given above, I was in a position to apply radix sort (which requires a desired lexicographic ordering and known ‘cardinal’ values for the letters of the alphabet you are sorting). My implementation breaks into three pieces – an ‘inner’ algorithm (in my case bucketsort), a sorter, and a way to track the unfinished portions of the array being sorted.

The basic idea of my implementation is that you start with the full array as a single ‘unsorted’ range. The algorithm loops as long as there is work to do, by removing a single unsorted range from a queue, processing that unsorted range, and then moving on to the next step. Once there is no more work to do, the array is sorted.

The algorithm requires an ‘inner’ sort algorithm, since radix sort processes one ‘digit’ at a time from the source alphabet. In my case, radix sort will process one key-value column at a time, starting from left to right. When processing a column, it will use a working array that has one linked list for each possible value of the digit. For instance, we would have a working array (of linked lists) of length 8 (since there are 7 distinct key values, plus one ‘missing’ key value). The sort will walk linearly through the list putting items into the ‘bucket’ that corresponds to the value for the first column. Then, after it has assigned all items to their appropriate buckets, it will put those items back into the array in the order in which they appear in the array and their linked list. At this point, the array is sorted by the first column, and the work remaining to be processed is the list of buckets of length 2 or more. The process is iteratively applied to each column, ignoring the values of previous columns, but when applying for a column, it is only applied to the subset being currently processed (i.e. after splitting buckets 1 and 2, the ‘remaining work’ will be the ranges (0, 2) and (2, 2), where the tuples are (start, length).

The work of splitting items into their buckets is mostly done by my ‘RadixSortBucketer’ class, given here:

1: public class RadixSortBucketer

2: { 3: private readonly LinkedList<int>[] _Buckets;

4:

5: public RadixSortBucketer(int bucketCount)

6: { 7: _Buckets = new LinkedList<int>[bucketCount];

8: }

9:

10: public void AddItem(int bucket, int item)

11: { 12: var list = _Buckets[bucket];

13: if(list == null)

14: _Buckets[bucket] = list = new LinkedList<int>();

15: list.AddLast(item);

16: }

17:

18: public void SaveItemsTo(

19: LinkedList<RadixSortUnfinishedRange> unsortedRanges,

20: int[] workArray,

21: int startOffset)

22: { 23: var targetIndex = startOffset;

24: foreach(var list in _Buckets.Where(b => b != null))

25: { 26: if(list.Count > 1)

27: unsortedRanges.AddLast(

28: new RadixSortUnfinishedRange(

29: targetIndex,

30: list.Count));

31: foreach(var item in list)

32: workArray[targetIndex++] = item;

33: }

34: }

35:

36: public void Clear()

37: { 38: for(var i = 0; i < _Buckets.Length; i++)

39: _Buckets[i] = null;

40: }

41: }

The array of LinkedList<int> is the buckets, the LinkedList<int> in each bucket is (possibly empty) list of indices that fall into that bucket. The unsorted range object looks like:

1: public class RadixSortUnfinishedRange

2: { 3: public readonly int Start;

4: public readonly int Count;

5:

6: public RadixSortUnfinishedRange(int start, int count)

7: { 8: Start = start;

9: Count = count;

10: }

11: }

and the overall algorithm looks like:

1: public class RadixSorter

2: { 3: public delegate int ValueFunctionDelegate(int level, int item);

4:

5: private readonly RadixSortBucketer _Bucketer;

6: private readonly int[] _WorkArray;

7: private readonly ValueFunctionDelegate _ValueFunction;

8: private readonly int _LevelCount;

9:

10: public RadixSorter(int[] arrayToSort, int bucketCount,

11: int levelCount, ValueFunctionDelegate valueFunction)

12: { 13: _WorkArray = arrayToSort;

14: _Bucketer = new RadixSortBucketer(bucketCount);

15: _LevelCount = levelCount;

16: _ValueFunction = valueFunction;

17: }

18:

19: public void Sort()

20: { 21: var currentLevel = 0;

22: var currentUnsortedRanges = new LinkedList<RadixSortUnfinishedRange>();

23: currentUnsortedRanges.AddLast(

24: new RadixSortUnfinishedRange(0, _WorkArray.Length));

25: while(currentUnsortedRanges.Count > 0)

26: { 27: var nextUnsortedRanges = new LinkedList<RadixSortUnfinishedRange>();

28: foreach(var range in currentUnsortedRanges)

29: { 30: _Bucketer.Clear();

31:

32: for(int i = range.Start, j = 0; j < range.Count; i++,j++)

33: _Bucketer.AddItem(

34: _ValueFunction(currentLevel, _WorkArray[i]),

35: _WorkArray[i]);

36:

37: _Bucketer.SaveItemsTo(

38: nextUnsortedRanges,

39: _WorkArray,

40: range.Start);

41: }

42:

43: currentUnsortedRanges = nextUnsortedRanges;

44: currentLevel++;

45: if(currentLevel >= _LevelCount)

46: break;

47: }

48: }

49: }

The only ‘special’ part of this is that I have defined a delegate to allow this to be parameterized. The delegate is a value function that returns the integral value corresponding to a particular item and ‘level’ (level is like the ‘current column’ value). In my particular problem, the value function is a delegate that uses the ‘level’ to determine which ‘value’ to ask for, and returns 0 if there is no value, or the sort order of the value if the value is not null. Item is the index into the original array to use when getting values for the algorithm.

Conclusion?

So, what was the end result of my experimentation, you ask? Well, the sort that used to take 17 seconds or so, now takes under 1 second, and much less if it doesn’t include the cost of assigning the sort orders to the key values (which doesn’t happen for each sort, it only happens for the first sort after a change to the key-value cache – which is pretty infrequent). Could my implementation be more efficient – absolutely. However, I’m a strong believer in “write it the way that makes the most sense to you, and then only make it ‘more efficient’ if profiling shows it to need to be more efficient” – this is basically the philosophy of avoiding ‘premature optimization’. Of course, I don’t condone ‘premature pessimisation’ either, so you shouldn’t choose the wrong algorithm or data structure for the job, but you also shouldn’t micro-optimize every bit of code you write, as you’re writing it.

I hope you find this description useful – I tried to give details about how & why I did what I did, instead of just ‘what’ I did. Hopefully that makes this an interesting read and provides some insights that just posting the code wouldn’t provide.